Features

The Graphite service is scaled out using the built-in sharding and replication capabilities of the carbon component.

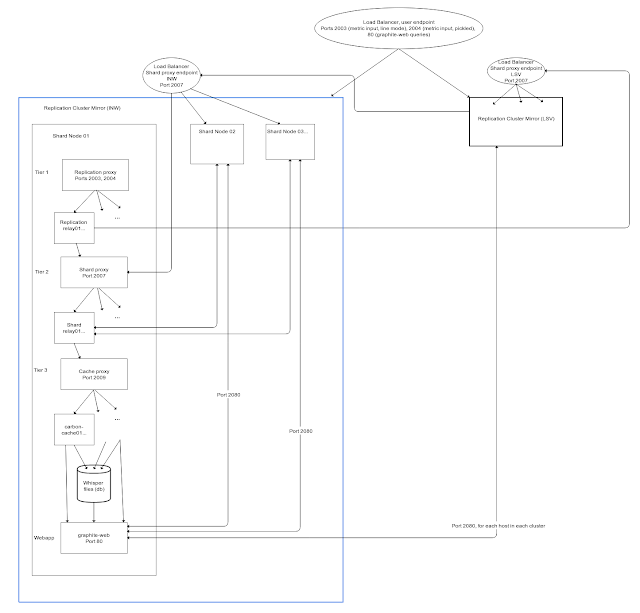

Two identical clusters are replicated across separate geographical sites, and both are available to receive incoming metrics and serve queries (master-master). Within each cluster, data are sharded across the hosts, and every host has identical capabilities. This configuration provides the following features:

- Increased capacity with the ability to scale in the future.

- Data redundancy tolerating individual server downtime.

- BCP (business continuity planning): the service remains available through a complete site failure, with no action required during the outage. After the failed site recovers, whisper files must be synced to it to fill in the metrics it missed.

Architecture

Each host in each cluster runs three tiers of metric routing to process incoming metric data. At each tier, HAProxy load-balances among a set of local carbon cache/relay daemons. Multiple carbon daemons run at each tier to make use of multiple CPU cores/threads, which is why HAProxy sits in front of every tier.

- Tier 1, replication: HAProxy distributes traffic on ports 2003 and 2004 (line format and pickle format, respectively) originating from a data source (client API) to the carbon-relay daemons. Relays at this tier replicate metrics across sites (mirroring) by forwarding a copy of each metric locally to Tier 2 and remotely to a load-balanced VIP representing Tier 2 of each mirror.

- Tier 2, sharding: HAProxy distributes port 2007 traffic, consisting of replicated metrics, to the carbon-relay daemons responsible for sharding metrics across all hosts of a site (cluster). Relays at this tier determine the proper destination host for each metric and forward it to Tier 3 of that host (local or remote).

- Tier 3, local relay fanout: HAProxy distributes the sharded traffic on port 2009, directed there by Tier 2, to the carbon-relay daemons responsible for sharding locally among a set of carbon-cache daemons. Fanout at this tier exists solely to make use of multiple CPU cores/threads on the server. Finally, each carbon-cache daemon writes its own sharded set of metrics to whisper database files.
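
The path a metric takes through the three tiers can be sketched in Python as a rough illustration. This is not carbon's implementation: the site names, host names, and cache count are hypothetical, and a plain hash-modulo scheme stands in for whatever routing method the carbon-relay daemons are actually configured with. The sketch only shows the shape of the flow: replicate to every site, pick one host per site, then pick one carbon-cache on that host.

```python
import hashlib

# Hypothetical topology: two mirrored sites with three hosts each.
SITES = {
    "site-a": ["a1", "a2", "a3"],
    "site-b": ["b1", "b2", "b3"],
}
# Hypothetical number of carbon-cache daemons per host.
CACHES_PER_HOST = 4


def _bucket(key: str, n: int) -> int:
    """Map key to a stable index in range(n).

    MD5 is used only because it is stable across processes and hosts,
    not for security. This is a stand-in for carbon-relay's real routing.
    """
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n


def tier1_replicate(metric: str) -> list:
    """Tier 1: every site (local and mirror) receives a copy of the metric."""
    return sorted(SITES)


def tier2_shard(site: str, metric: str) -> str:
    """Tier 2: choose the one host in the site that owns this metric."""
    hosts = SITES[site]
    return hosts[_bucket(metric, len(hosts))]


def tier3_fanout(metric: str) -> int:
    """Tier 3: choose the local carbon-cache that owns this metric.

    A salt keeps this choice independent of the Tier 2 choice; without it,
    equal host and cache counts would send all of a host's metrics to a
    single cache.
    """
    return _bucket("cache:" + metric, CACHES_PER_HOST)


def route(metric: str) -> list:
    """Return (site, host, cache_index) for every copy of the metric."""
    return [
        (site, tier2_shard(site, metric), tier3_fanout(metric))
        for site in tier1_replicate(metric)
    ]


if __name__ == "__main__":
    for destination in route("servers.web01.cpu.user"):
        print(destination)
```

Because every step is a pure function of the metric name, any Tier 2 relay on any host reaches the same decision, which is what lets HAProxy hand a connection to whichever relay is free. Note that hash-modulo reshuffles most metrics when a host is added, so it illustrates the idea rather than a scheme to deploy.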